Max Tegmark contends that the deliberate downplaying of AI risks by big tech could delay necessary strict regulations until it’s too late

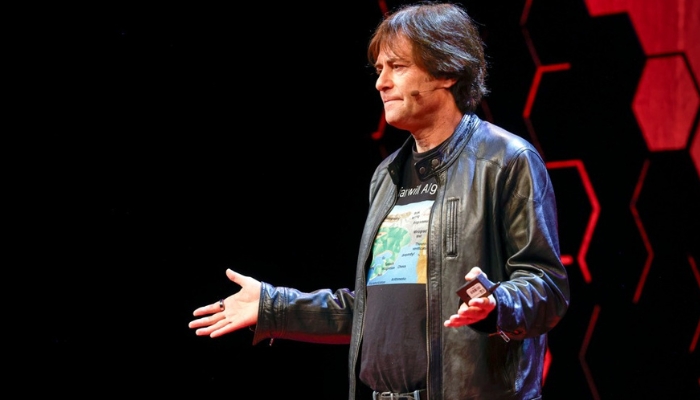

A leading scientist and AI campaigner, Max Tegmark, has cautioned that big tech has effectively diverted attention from the ongoing existential risk posed by artificial intelligence. Speaking to the Guardian at the AI Summit in Seoul, South Korea, Tegmark expressed concern that this shift in focus, from the extinction of life to a broader view of AI safety, could lead to an unacceptable delay in implementing strict regulations on the developers of these highly powerful programs.

Tegmark, who trained as a physicist, drew a historical parallel: “In 1942, Enrico Fermi constructed the first reactor with a self-sustaining nuclear chain reaction under a Chicago football field. When the leading physicists of that era learned of this achievement, they were deeply concerned. They recognized that the most significant obstacle to building a nuclear bomb had been overcome. They understood that its development was now just a few years away – and indeed, it occurred three years later with the Trinity test in 1945.”

“AI models capable of passing the Turing test, where a person can’t distinguish them from a human in conversation, serve as a warning about AI that could become uncontrollable. This is why figures like Geoffrey Hinton, Yoshua Bengio, and many tech CEOs, at least in private, are currently alarmed,” Tegmark explained. His non-profit Future of Life Institute led the call last year for a six-month “pause” in advanced AI research due to these concerns. According to him, the launch of OpenAI’s GPT-4 model in March of that year was a warning sign, indicating that the risk was dangerously close.

Although the letter garnered thousands of signatures, including those of Hinton and Bengio, two of the three “godfathers” of AI who pioneered the machine learning approach underpinning the field today, no pause was agreed upon. Instead, the AI summits, of which Seoul is the second following Bletchley Park in the UK last November, have led the way in AI regulation. “We wanted that letter to legitimize the conversation, and we are quite pleased with how that turned out,” Tegmark said. “Once people saw that even experts like Bengio are concerned, they thought, ‘It’s okay for me to be concerned too.’ Even the person at my gas station told me, after that, that he’s worried about AI replacing us.”

But now, Tegmark said, the conversation needs to shift from mere discussion to concrete action.

Since the initial announcement of what later became the Bletchley Park summit, the focus of international AI regulation has shifted away from existential risks.

In Seoul, only one of the three “high-level” groups directly addressed safety, examining risks across the spectrum, “from privacy breaches to disruptions in the job market and potential catastrophic outcomes.” Tegmark argues that downplaying the most severe risks is not healthy, and not accidental.

“That’s exactly what I predicted would happen due to industry lobbying,” he said. “In 1955, the first journal articles emerged stating that smoking causes lung cancer, and you would think that there would be some regulation fairly quickly. But no, it took until 1980, because there was this huge push by the industry to distract. I feel that’s what’s happening now.”

Tegmark acknowledged that AI certainly causes present harms too: bias, harm to marginalized groups… But as UK science and technology secretary Michelle Donelan herself noted, he said, both can be addressed. Focusing only on present harms, in his view, is akin to saying, “Let’s ignore climate change because there’s a hurricane this year, so we should just concentrate on the hurricane.”

Tegmark’s critics have leveled the same accusation at his own claims: that the industry wants to shift the focus to hypothetical future risks to distract from concrete present harms. Tegmark dismisses this notion, stating, “Even if you consider it independently, it’s quite intricate: it would require significant foresight for someone like OpenAI’s boss, Sam Altman, to avoid regulation by suggesting to everyone that there could be catastrophic consequences and then convincing people like us to raise the alarm.”

He suggests that the limited support from certain tech leaders stems from the perception that they’re trapped in an unwinnable scenario. “Even if they desire to cease their activities,” he explains, “they feel powerless to do so. If a tobacco company CEO suddenly feels uneasy about their actions, what can they do? They’ll likely be replaced. Therefore, the only path to prioritizing safety is if the government establishes universal safety standards.”